Search before asking

- I have searched the YOLOv5 issues and found no similar feature requests.

Description

An option to train with ReLU activation.

An option to export the model without the final permutation and translation layers.
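To make the first request concrete, here is a minimal sketch of the kind of activation swap I mean, written in plain PyTorch. It assumes the activations are `nn.SiLU` modules; the helper name `replace_silu_with_relu` and the toy model are hypothetical and are not part of the YOLOv5 code base. A model changed this way still has to be trained (or retrained) with the new activation.

```python
import torch
import torch.nn as nn


def replace_silu_with_relu(module: nn.Module) -> nn.Module:
    """Recursively replace every nn.SiLU child with nn.ReLU (hypothetical helper)."""
    # Copy the children into a list so the module dict is not mutated while iterating.
    for name, child in list(module.named_children()):
        if isinstance(child, nn.SiLU):
            setattr(module, name, nn.ReLU(inplace=True))
        else:
            replace_silu_with_relu(child)
    return module


# Toy stand-in for a detection backbone; NOT the real YOLOv5 architecture.
toy_model = nn.Sequential(
    nn.Conv2d(3, 8, 3),
    nn.SiLU(),
    nn.Sequential(nn.Conv2d(8, 8, 3), nn.SiLU()),
)
replace_silu_with_relu(toy_model)
```

An official option would of course set the activation at model construction time instead of patching modules afterwards; the sketch only shows how small the change is.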

Use case

With these modifications, the model is easier to port to edge devices such as Google's Edge TPU and Rockchip's NPU, and it performs better on them.

Additional

YOLOv5 is the easiest tool I have tried for training a detection model (I've tried yolov4, mt-yolo, yolov6, ssd-mobiledet, yolov5-lite, ...).

However, for efficient inference on edge devices (Google Edge TPU, Rockchip RK-xxxx, Intel Movidius, ...), we need to change the activation function to ReLU and remove some layers. With these modifications we've got good results: for example, a 6-class YOLOv5n model runs at 40 FPS on the RK3568 and at 200 FPS on the Google Edge TPU (including C++ post-processing such as NMS).
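For readers unfamiliar with the post-processing step mentioned above, here is a rough pure-Python sketch of greedy NMS. Our actual implementation is in C++ and is not shown here; the function names, the `(x1, y1, x2, y2)` box layout, and the 0.45 IoU threshold are assumptions made for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.45):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

In this toy input the second box overlaps the first with an IoU of about 0.68, so it is suppressed and only the first and third boxes are kept.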

It's not hard for users to modify the source code to achieve this. However, I feel it is worth adding to the official code.

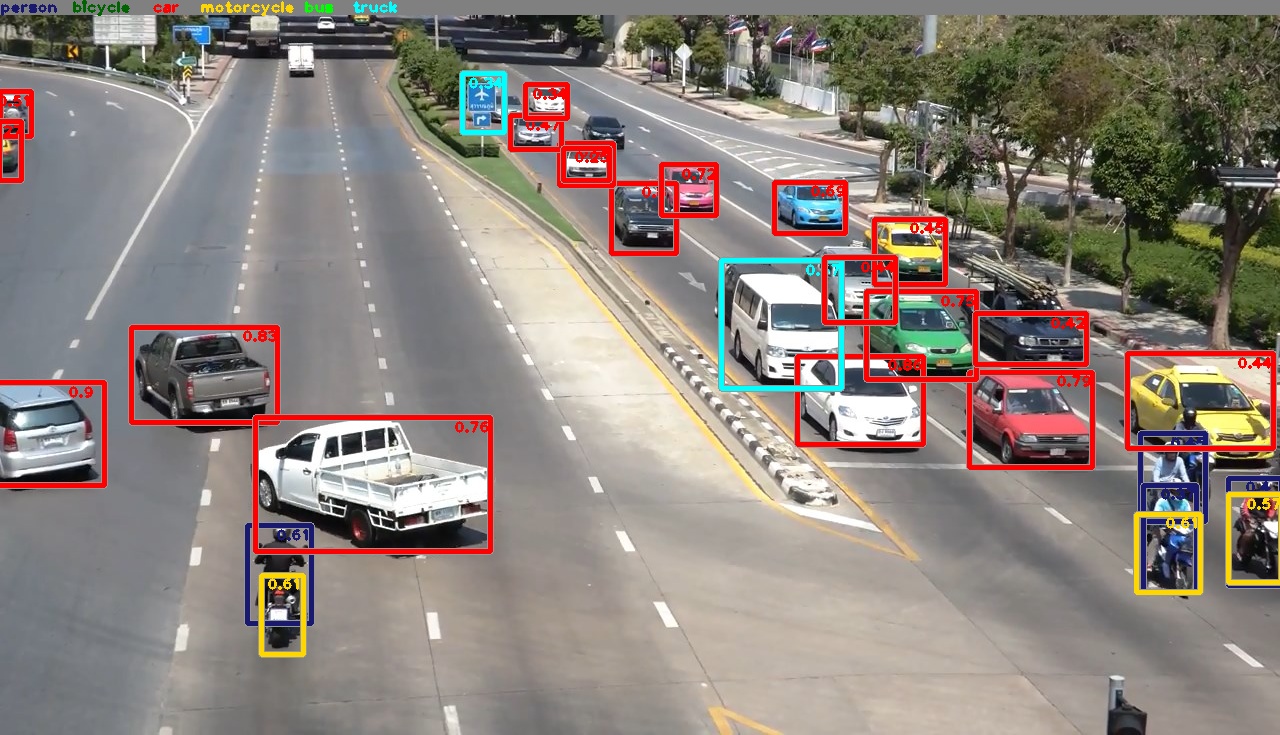

This is an example of our model running on Google's Edge TPU.

Are you willing to submit a PR?

- Yes I'd like to help by submitting a PR!