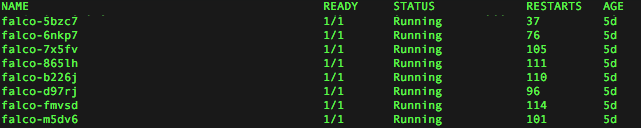

Hi, I am testing the Falco DaemonSet on our GKE cluster in GCP. It works great, except that after a week of running, all of the Falco pods had restarted hundreds of times. This is probably saturating the kubelet's rate limit and preventing new pods from running properly: they kept crashing, and deleting the Falco DaemonSet solved the issue.

Perhaps the default rulesets are too intensive and too noisy for a typical k8s environment. I've read through the Falco rules wiki page, but nothing there stood out as a fix. Can anyone give me some pointers on fine-tuning the default rules? Where should I start? Thanks.

K8s info:

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.0", GitCommit:"ddf47ac13c1a9483ea035a79cd7c10005ff21a6d", GitTreeState:"clean", BuildDate:"2018-12-03T21:04:45Z", GoVersion:"go1.11.2", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"10+", GitVersion:"v1.10.11-gke.1", GitCommit:"5c4fddf874319c9825581cc9ab1d0f0cf51e1dc9", GitTreeState:"clean", BuildDate:"2018-11-30T16:18:58Z", GoVersion:"go1.9.3b4", Compiler:"gc", Platform:"linux/amd64"}

Output of `kubectl logs` and `kubectl describe pod`: https://gist.github.com/ye/0f4195faf3f80e7d4c8d618ae6b590b2