-

Notifications

You must be signed in to change notification settings - Fork 5.9k

[PHI]Change feed_op to phi kernel #49116

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[PHI]Change feed_op to phi kernel #49116

Conversation

|

你的PR提交成功,感谢你对开源项目的贡献! |

| } | ||

|

|

||

| void OperatorWithKernel::CheckWhetherPreparePhiData( | ||

| const VariableNameMap& innames, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

函数没有用到 innames 这个变量

This function has not used parameter innames.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这个是预留的参数,后续要把其他地方的prepare_phi_data整合到这个里边会用到

paddle/fluid/framework/operator.cc

Outdated

| if (HasSameTensorType<phi::DenseTensor>(phi_output, var_output) || | ||

| HasSameTensorType<phi::SparseCooTensor>(phi_output, var_output) || | ||

| HasSameTensorType<framework::Strings>(phi_output, var_output)) { | ||

| need_prepare_phi_data_ = true; | ||

| } |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

只要有一个 tensor 的类型相同,就需要准备 phi_data 吗?与前面的注释不符

// Check each tensor in KernelContext, if there is a tensor that has

// different type with variable. The PhiKernelContext need be reConstructed.

Do we need to prepare phi_data as long as there is a tensor that has the same type as Variable? This is inconsistent with the above comments.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

谢谢,这里确实有问题,我修改一下

|

|

||

| void SetFeedVariable(Scope* scope, | ||

| const Strings& input, | ||

| const std::vector<std::string>& input, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里为什么输入又换成了vector<std::string>?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这个函数衔接Python传入数据,Python传入的list string无法转换成Strings,这里需要替换成vector<std::string>

paddle/fluid/framework/operator.cc

Outdated

| const auto& phi_kernel_context = impl_->getKernelContext(); | ||

| size_t phi_tensor_index = 0; | ||

| // Check each tensor in KernelContext, if there is a tensor that has | ||

| // different type with variable. The PhiKernelContext need be reConstructed. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

reConstructed->reconstructed

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

ok

paddle/fluid/framework/operator.cc

Outdated

| if (phi_kernel_context->OutputsSize() >= phi_tensor_index) { | ||

| need_prepare_phi_data_ = true; | ||

| return; | ||

| } |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里的判断以及处理逻辑和prepare_phi_data有什么关联?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

缓存的数据大小已经和现在传入的tensor大小不一致了,说明需要重新构造KernelContext,所以需要处理prepare_phi_data

paddle/fluid/framework/operator.cc

Outdated

| auto var_output = scope.FindVar(var_name); | ||

| auto phi_output = phi_kernel_context->MutableOutputAt<phi::TensorBase>( | ||

| phi_tensor_index); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

从map中取值和从vector中按顺序取值可以正好一一对应上吗?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这个应该是能对上的,std::map是有序的,此外我们构造KernelContext使用的RuntimeContext也是使用的这个方式迭代拿到的输入输出

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里是不是对不上,咱们kernelcontext拿的KernelSignature返回的input和output,如果是直接用op的Input和Output,可能会对不上

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

谢谢,这个确实有问题,已修复

| // stored in a vector as PHI kernel argument. | ||

|

|

||

| template <typename T> | ||

| class PHIVector : public phi::ExtendedTensor, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

好的,谢谢

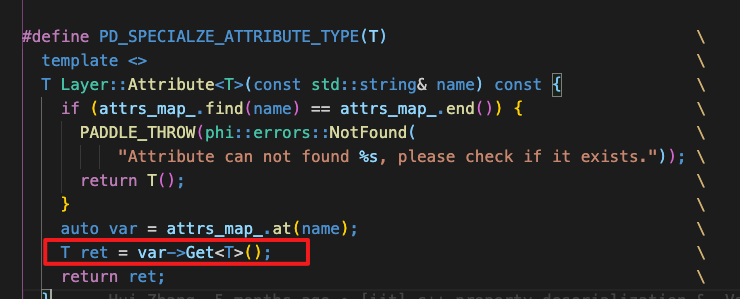

paddle/fluid/jit/layer.cc

Outdated

| PD_SPECIALZE_ATTRIBUTE_TYPE(std::vector<int>) | ||

| PD_SPECIALZE_ATTRIBUTE_TYPE(std::vector<float>) | ||

| PD_SPECIALZE_ATTRIBUTE_TYPE(std::vector<std::string>) | ||

| PD_SPECIALZE_ATTRIBUTE_TYPE(framework::Strings) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里的宏看着是注册Attribute类型的,Strings目前是不应该算Input类型?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

原因是这里的attribute实际保存的是variable类型的数据,会和framework的Strings类型存在交互

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里和feed op的改造有关吗?jit应该是留杰他们单独整的一套吧,attribute类型里原先就有std::vector<std::string>,我们好像不应该把这里改成var系列的,如果真有必要的话,也得是平行再增加

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

已和留杰对齐后取消jit的修改

| void operator()(const phi::DenseTensor &in_tensor) const { | ||

| phi::DenseTensor *out_tensor = out_var_->GetMutable<phi::DenseTensor>(); | ||

| void operator()(const phi::DenseTensor& in_tensor) const { | ||

| phi::DenseTensor* out_tensor = &(out_var_.Get<phi::DenseTensor>()); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里为什么换成了Get,如果是const类型的tensor后面是怎么修改的?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里是个语法细节,首先需要明确,这里的out_var变量已经不是之前的variable指针,换成了RawTensor实例对象(根据威行建议,会把名字再换回RawTensor),这里operator()函数是const成员函数,实例对象必须调用const成员函数。RawTensor的GetMutable函数是非const的,这里无法使用,必须使用带有const的Get函数

| void operator()(const framework::Strings &in_str) const { | ||

| framework::Strings *out_str = out_var_->GetMutable<framework::Strings>(); | ||

| void operator()(const framework::Strings& in_str) const { | ||

| framework::Strings* out_str = &(out_var_.Get<framework::Strings>()); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

同上

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

同上

| paddle::framework::EmptyGradOpMaker<paddle::imperative::OpBase>, | ||

| paddle::operators::FeedOpInfoMaker); | ||

|

|

||

| PD_REGISTER_KERNEL(feed_dense_tensor, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里T是需要的吗?用GENARAL那个减少一些代码可以吗?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

谢谢,已修改

| private: | ||

| framework::Variable *out_var_; | ||

| const platform::Place &place_; | ||

| framework::Raw out_var_; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里是不是应该用T类型存储值?Raw应该是尽量避免使用的,用any是比较慢的,这个op还是相当高频的

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里无法使用T来存储,这个class有三个operator()重载,每个重载处理不同的out_var类型, 如果是使用T类型,那么out_var的类型是固定的,编译会报错

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

已去除Raw,通过拆分FeedVariableVisitor的逻辑来实现

paddle/fluid/jit/layer.cc

Outdated

| PD_SPECIALZE_ATTRIBUTE_TYPE(std::vector<int>) | ||

| PD_SPECIALZE_ATTRIBUTE_TYPE(std::vector<float>) | ||

| PD_SPECIALZE_ATTRIBUTE_TYPE(std::vector<std::string>) | ||

| PD_SPECIALZE_ATTRIBUTE_TYPE(framework::Strings) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里和feed op的改造有关吗?jit应该是留杰他们单独整的一套吧,attribute类型里原先就有std::vector<std::string>,我们好像不应该把这里改成var系列的,如果真有必要的话,也得是平行再增加

| if (platform::is_same_place(in_tensor.place(), place)) { | ||

| *out = in_tensor; | ||

| } else { | ||

| platform::DeviceContext* context = |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里的place就是dev_ctx的place的话,是不是可以直接用输入参数的dev_ctx

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

已修改

|

LGTM for changes to IPU part |

| custom_cpu, | ||

| ALL_LAYOUT, | ||

| paddle::operators::FeedDenseTensorKernel<phi::CustomContext>, | ||

| ALL_DTYPE) {} |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

此处只注册了 custom_cpu 的 kernel,如果注册了其他名称的自定义设备(例如现在的科大项目),此处是感知不到的,代码会挂掉吗?

Here only registers custom_cpu kernel, if users have registered custom devices with other name, will these codes fail?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这里注册其他名称的自定义设备也不会有问题,只要跑起来有匹配的对应的backend就行

| } // namespace operators | ||

| } // namespace paddle | ||

|

|

||

| // TODO(YuanRisheng): Maybe we need design a new registry macro for |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

这个我们可能需要提高下优先级

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

好的

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM

PR types

Others

PR changes

Others

Describe

背景:

执行器的需求,需要将feed和fetch改写成PHI Kernel统一执行调度

本PR将feed_op算子改成PHI Kernel,详细包括以下工作:

1,FeedList继承ExtendedTensor,接入PHI Kernel执行体系

2,改写feed_op算子逻辑为PHI Kernel的形式

3,完善RawTensor功能,提供与GetMutable相匹配的Get接口

4,支持ExtendedTensor输入类型,对注册机制进行扩展

5,为缓存PHI KernelContext机制添加检查验证功能函数

6,重构全局自定义设备类型获取代码

TODO:

1,本PR虽然为是否缓存KernelContext添加了检查功能函数,但并未将代码当中的need_prepare_phi_data_的滥用情况都收到改函数当中,后续PR会完善该检查功能函数,将代码中使用need_prepare_phi_data_的地方收到这个函数里,提高可维护性

2,支持设备无关Kernel单独的注册宏