This is the official repository for the IROS 2025 paper: RGB-Thermal Visual Place Recognition via Vision Foundation Model

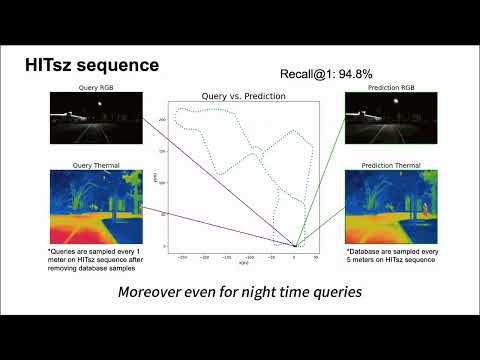

Visual place recognition is a critical component of robust simultaneous localization and mapping (SLAM) systems. Conventional approaches rely primarily on RGB imagery, so their performance degrades significantly in extreme environments, such as scenes with poor illumination or airborne particulate interference (e.g., smoke or fog). Furthermore, existing techniques often struggle to generalize across scenarios. To overcome these limitations, we propose an RGB-thermal multimodal fusion framework for place recognition, specifically designed to enhance robustness in extreme environmental conditions. Our framework incorporates a dynamic RGB-thermal fusion module, coupled with dual fine-tuned vision foundation models as the feature extraction backbone. Experimental results on public datasets and our self-collected dataset demonstrate that our method significantly outperforms state-of-the-art RGB-based approaches, achieving generalizable and robust retrieval across both day and night scenarios.
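To give a rough intuition for what "dynamic" fusion can mean, the snippet below is a minimal, hypothetical sketch of a gated fusion of an RGB descriptor and a thermal descriptor. It is not the fusion module from the paper: the gate vectors `gate_rgb` and `gate_thermal` stand in for learned parameters (an assumption), and the function names are illustrative only. Each modality receives a content-dependent score, the scores are turned into weights with a softmax, and the weighted sum is L2-normalized so it can be compared with cosine similarity.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit L2 norm (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def softmax(logits):
    """Numerically stable softmax over a short list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def gated_fusion(rgb_desc, thermal_desc, gate_rgb, gate_thermal):
    """Hypothetical dynamic fusion: weight each modality by a content-dependent gate.

    gate_rgb / gate_thermal are placeholders for learned projection vectors
    (an assumption, not the paper's module). Their dot product with each
    descriptor yields one score per modality.
    """
    scores = [
        sum(g * x for g, x in zip(gate_rgb, rgb_desc)),
        sum(g * x for g, x in zip(gate_thermal, thermal_desc)),
    ]
    w_rgb, w_thermal = softmax(scores)
    fused = [w_rgb * r + w_thermal * t for r, t in zip(rgb_desc, thermal_desc)]
    return l2_normalize(fused), (w_rgb, w_thermal)


if __name__ == "__main__":
    # A weak RGB response (e.g. at night) next to a strong thermal response.
    fused, (w_rgb, w_thermal) = gated_fusion(
        [0.1, 0.0, 0.0], [0.0, 1.0, 0.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]
    )
    print(f"w_rgb={w_rgb:.3f}, w_thermal={w_thermal:.3f}")
```

In this toy case the thermal branch receives the larger weight because its score is higher, which is the kind of behavior one would want when RGB input is degraded.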

Run `quickstart.ipynb` to try our model for visual place recognition.
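Visual place recognition is usually framed as retrieval: each image is mapped to a global descriptor, and a query is matched to the most similar descriptors in a database of previously visited places. The sketch below illustrates that generic step with cosine similarity over plain Python lists. It does not reproduce the notebook's code; `retrieve_top_k` and the toy descriptors are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a > 0 and norm_b > 0 else 0.0


def retrieve_top_k(query, database, k=5):
    """Return indices of the k database descriptors most similar to the query, best first."""
    ranked = sorted(
        range(len(database)),
        key=lambda i: cosine_similarity(query, database[i]),
        reverse=True,
    )
    return ranked[:k]


if __name__ == "__main__":
    database = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]  # toy place descriptors
    query = [0.9, 0.1]
    print(retrieve_top_k(query, database, k=2))
```

For real descriptors (e.g. 768-dimensional) a vectorized library or an approximate nearest-neighbor index would be the practical choice; the list-based version only shows the logic.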

You can download the checkpoint HERE.

Our network is trained and evaluated on the STheReO dataset. First, download the dataset HERE.

Then run `Dataset/STheReO_train_split.ipynb` and `Dataset/STheReO_test_split.ipynb` to split the original dataset and generate the corresponding `.mat` files.

Our model uses pretrained DINOv2 as its backbone, so download the pretrained ViT-B/14 weights HERE before training.
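As background on the backbone size: in DINOv2's ViT-B/14, each token covers a 14×14 pixel patch and has a width of 768, which presumably is why the training and evaluation commands pass `--features_dim 768`. The hypothetical helpers below show how an input resolution maps to a patch grid. Rounding the image size down to a multiple of 14 is an assumption made for illustration; this README does not state how the repository's preprocessing handles image sizes.

```python
PATCH_SIZE = 14  # ViT-B/14: each patch token covers a 14x14 pixel block
EMBED_DIM = 768  # ViT-B token width (assumed to match --features_dim 768)


def resize_to_patch_multiple(height, width, patch=PATCH_SIZE):
    """Round an image size down to the nearest multiple of the patch size (hypothetical helper)."""
    return (height // patch) * patch, (width // patch) * patch


def num_patch_tokens(height, width, patch=PATCH_SIZE):
    """Number of patch tokens the ViT sees after rounding the size down to the patch grid."""
    h, w = resize_to_patch_multiple(height, width, patch)
    return (h // patch) * (w // patch)


if __name__ == "__main__":
    for size in [(224, 224), (480, 640)]:
        h, w = resize_to_patch_multiple(*size)
        print(size, "->", (h, w), "tokens:", num_patch_tokens(*size), "dim:", EMBED_DIM)
```

Larger inputs yield more patch tokens but the same per-token width, so the descriptor dimension stays tied to the backbone size rather than to the image resolution.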

Then start training with the following command:

```bash
python3 train.py --save_dir /path/to/your/save/directory --features_dim 768 --sequences KAIST --foundation_model_path /path/to/pretrained/weights
```

Evaluate performance with the following command:

```bash
python3 eval.py --resume /path/to/checkpoint --save_dir /path/to/save/directory --sequences SNU --img_time allday --features_dim 768
```

- `--sequences`: choose from {SNU, Valley}
- `--img_time`: choose from {daytime, nighttime, allday}
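Place recognition methods are commonly compared with Recall@N: the fraction of queries for which at least one correct database match appears among the top-N retrieved results. The sketch below computes this metric from ranked predictions. This README does not state which metrics `eval.py` reports or how it defines a correct match; the distance-threshold convention mentioned in the docstring is a common choice in the VPR literature, not a confirmed setting of this repository.

```python
def recall_at_n(predictions, ground_truth, n_values=(1, 5, 10)):
    """Fraction of queries with at least one true positive among the top-N retrieved.

    predictions: per-query list of database indices, best match first.
    ground_truth: per-query collection of database indices counted as correct
        (in VPR these are often the database frames within a distance threshold
        of the query; the threshold used by eval.py is not specified here).
    """
    recalls = {}
    for n in n_values:
        hits = sum(
            1
            for preds, positives in zip(predictions, ground_truth)
            if set(preds[:n]) & set(positives)
        )
        recalls[n] = hits / len(predictions)
    return recalls


if __name__ == "__main__":
    preds = [[3, 1, 2], [0, 4, 5], [7, 8, 9]]  # toy ranked retrievals for 3 queries
    positives = [{3}, {5}, {1}]                # toy ground-truth matches
    print(recall_at_n(preds, positives, n_values=(1, 3)))
```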