[fix] Set deepspeed's fp16 auto_cast to false

#279

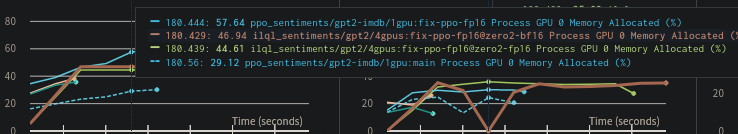

Thanks @reciprocated and @ZHAOTING. Does this increase memory usage, or are the model weights still being cast to fp16 somewhere else? In the report, the bf16 and fp16 runs use about the same memory, but there's one run that seems to use more memory than the baseline:

No, it doesn't increase memory usage. For the baseline I accidentally picked an earlier bf16 run, while the run for the fix was launched without accelerate, so the difference you see is between bf16 and fp32 (it's not possible to make an fp16 run on main). Just in case, I made a few more runs with fp32, zero-fp32, zero-fp16, and zero-bf16:

Ah okay, got it. This LGTM!

Hi, I have some related problems. First, without any modification, the code fails with the following error: And after I delete "mixed_precision" in

Hi @XWwwwww! I'm able to reproduce the first part; it's related to the newest accelerate release, which started raising errors in this case. However, I'm not getting the runtime error you're seeing. Maybe it's related to a particular library version (at least that's what a quick Google search suggests). Are you having the same problem with other examples as well?

Hi @reciprocated! My torch version is 1.10.1.

Can you try upgrading your PyTorch version? Ref: #264 (comment)

Fixes #238.

https://github.com/huggingface/accelerate/blob/4e5cc0c6b9c28fc569bfc70dd0508225d187fbf9/src/accelerate/utils/dataclasses.py#L527
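For reference, DeepSpeed's `fp16` config section accepts an `auto_cast` key, which (per DeepSpeed's configuration docs) controls whether inputs are automatically cast to fp16. The minimal sketch below assumes the config accelerate generates is a plain Python dict shaped like `{"fp16": {"enabled": True, "auto_cast": True}, ...}` and shows only the intended end state: fp16 stays enabled while `auto_cast` is `False`. The helper `disable_fp16_auto_cast` is hypothetical; it is not part of trlx, accelerate, or DeepSpeed, and is not necessarily how this PR applies the fix.

```python
import copy
import json


def disable_fp16_auto_cast(ds_config: dict) -> dict:
    """Return a copy of a DeepSpeed config dict with fp16 auto_cast turned off.

    Hypothetical helper for illustration only; not a trlx/accelerate/DeepSpeed API.
    """
    config = copy.deepcopy(ds_config)  # leave the caller's dict untouched
    fp16 = config.get("fp16")
    if isinstance(fp16, dict):
        # Keep fp16 mixed precision as configured, but stop DeepSpeed from
        # automatically casting inputs to fp16
        fp16["auto_cast"] = False
    return config


# Assumed shape of the fp16 section accelerate fills in for mixed_precision="fp16"
generated = {"fp16": {"enabled": True, "auto_cast": True}, "zero_optimization": {"stage": 2}}
fixed = disable_fp16_auto_cast(generated)
print(json.dumps(fixed["fp16"]))  # {"enabled": true, "auto_cast": false}
```

Copying the dict instead of mutating it in place is just a choice made for the sketch; what matters is that DeepSpeed ends up receiving `"auto_cast": false` in its `fp16` section.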

https://wandb.ai/sorry/trlx/reports/-fix-Set-deepspeed-s-fp16-auto_cast-to-false-279--VmlldzozNDkzMzkx

Thanks to @ZHAOTING for finding this fix.